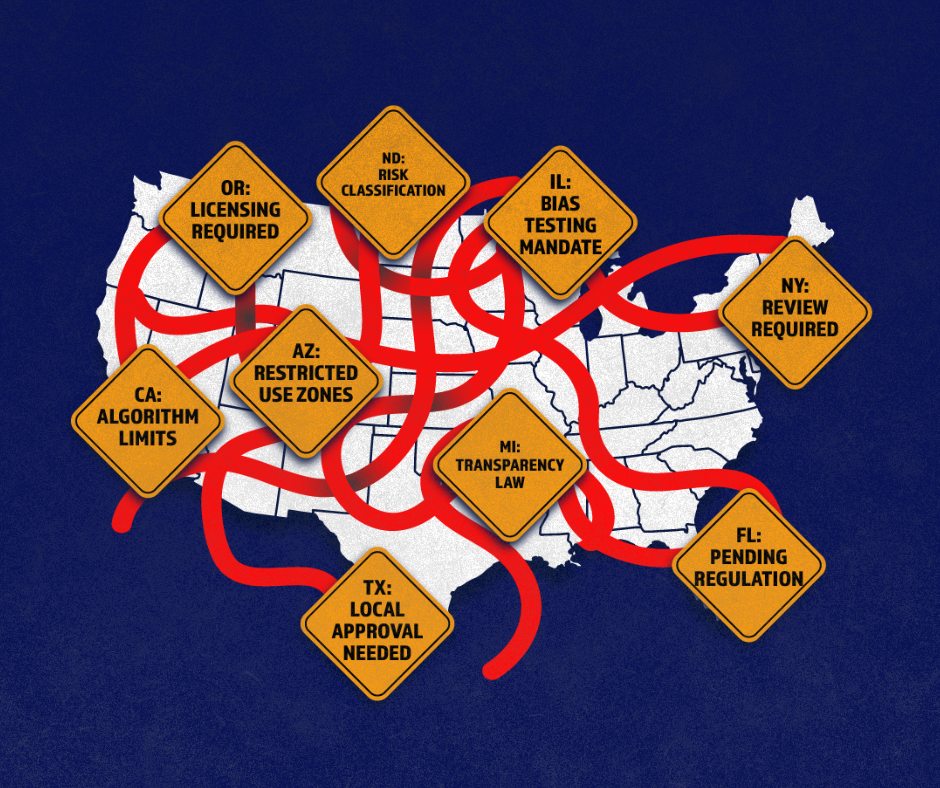

WASHINGTON, D.C. — Yesterday, President Donald Trump issued an executive order to address a wave of state and local legislation proposed nationwide to regulate AI. In 2025, more than 1,100...

Senator Jon Husted recently introduced the Children Harmed by AI Technology (CHAT) Act of 2025. The stated goal is clear: protect minors from harmful interactions with AI companion chatbots. The...

WASHINGTON, D.C. – The Consumer Choice Center is encouraged by the Trump Administration’s AI Action Plan, released today, which promises to revamp existing regulations and rules currently holding back tech innovation,...

A federal moratorium on state-level AI regulations isn’t some skull-crushing overreach; it’s a prudent way to stop bad local rules and unify eventual AI rules of the road. Consumers who...

Last week, an investigation by Reuters revealed that Chinese researchers have been using open-source AI tools to build nefarious-sounding models that may have some military application. The reporting claims that...

February 5, 2024 – On February 2, the European Union’s ambassadors greenlit the Artificial Intelligence Act (AI Act). Next week, the Internal Market and Civil Liberties committees will decide...